Getting HBase to perform well in a production environment is an iterative process that involves tweaking various configuration parameters. This post dives deep into optimizing HBase and Hadoop performance by focusing on hardware considerations, YARN architecture, JVM garbage collection, and system-level settings. Let’s explore how to fine-tune these components for a smoother, faster, and more reliable big data experience. For more information, see the HBase performance guide: http://hbase.apache.org/0.94/book/performance.html

Server Hardware: The Foundation of Performance

The hardware on which your HBase and Hadoop cluster runs is the bedrock of performance. The configurations we’ll discuss are based on the following server specifications:

- RAM: 48GB

- CPUs: 40 Cores

- Ethernet: 1G

These parameters will guide our configuration settings in the following sections. Note that a faster network (10G Ethernet or better) will significantly improve performance.

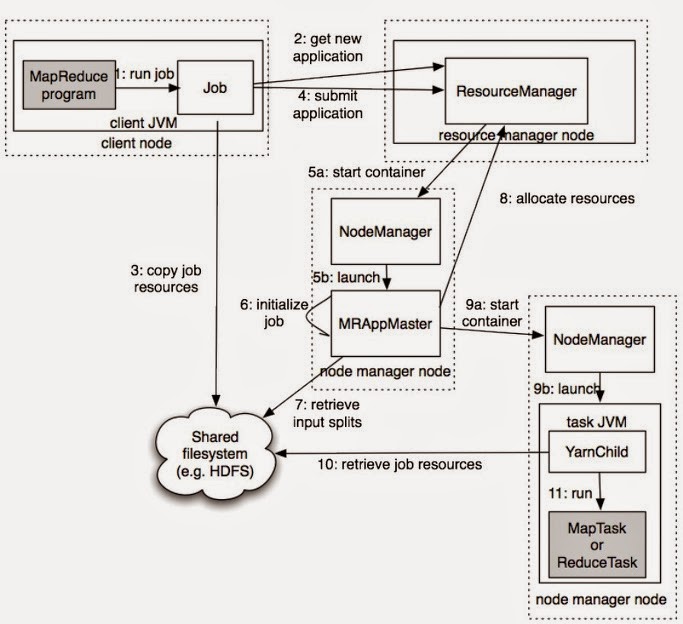

Understanding Hadoop YARN Architecture

YARN (Yet Another Resource Negotiator) is the resource management layer in Hadoop 2.0 and beyond. Understanding its workflow is crucial for optimizing resource allocation and job execution.

Courtesy: Hadoop

YARN Workflow: A Step-by-Step Breakdown

- Job Submission: The client submits a job to the ResourceManager.

- Application Request: The client asks the ResourceManager for a new application (an application ID).

- Resource Copying: Job resources are copied to a shared filesystem like HDFS.

- Application Submission to ResourceManager: The application is formally submitted.

- Container Launch via NodeManager:

- a. The NodeManager starts a Container.

- b. The MRAppMaster (MapReduce Application Master) is launched within the Container.

- Job Initialization: The MRAppMaster initializes the job.

- Input Split Retrieval: Input splits, defining the data to be processed, are retrieved.

- Resource Allocation Request: The MRAppMaster requests resources from the ResourceManager, indicating current resource usage.

- Task Container Requests: The MRAppMaster sends requests for task-specific Containers to the NodeManagers.

- a. The NodeManager starts a Container.

- b. A task JVM is launched within the Container.

- Task Resource Retrieval: The task (child) retrieves job resources from the shared filesystem (HDFS).

- Task Execution: The MapTask or ReduceTask runs within the Container.

YARN Architecture Diagram

HBase JAVA_OPTS Configuration: Taming the JVM

Cloudera’s advice on HBase memory is simple: “RAM, RAM, RAM. Don’t starve HBase.” Prioritize giving HBase ample RAM.

Also, heed Cloudera’s warning about swapping: “Watch out for swapping. Set swappiness to 0”. Swapping cripples performance.

Detailed Explanation of HBase Java Options

-XX:+ParallelRefProcEnabled: Enables parallel reference processing during garbage collection, significantly reducing GC pause times, especially during Young and Mixed GC. This can reduce GC remarking time by up to 75% and overall pause time by 30%.

-XX:-ResizePLAB and -XX:ParallelGCThreads:

- Promotion Local Allocation Buffers (PLABs) are used during Young generation garbage collection. Disable PLAB resizing to avoid communication overhead between GC threads.

-XX:ParallelGCThreads = 8 + (logical processors - 8) * (5/8): This formula sets the number of threads used for parallel garbage collection. For our 40-core server:

-XX:ParallelGCThreads = 8 + (40 - 8) * (5 / 8) = 28
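
The arithmetic above can be sketched as a small shell function. The function name `parallel_gc_threads` is hypothetical, and the branch for machines with 8 or fewer logical processors (use one thread per processor) is an assumption based on the HotSpot default rather than something stated in this post.

```bash
# Hypothetical helper: derive a ParallelGCThreads value from the core count.
# Assumption: with 8 or fewer logical processors, use one GC thread per processor.
parallel_gc_threads() {
  cpus=$1
  if [ "$cpus" -le 8 ]; then
    echo "$cpus"
  else
    # Integer form of 8 + (cpus - 8) * (5/8); multiply before dividing.
    echo $(( 8 + (cpus - 8) * 5 / 8 ))
  fi
}

parallel_gc_threads 40   # prints 28
```

Multiplying before dividing keeps the integer arithmetic from truncating 5/8 to zero.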

Recommended Java GC and HBase Heap Settings

Step 1. Allocate Sufficient Heap Memory: Edit the $HBASE_HOME/conf/hbase-env.sh file to increase the heap size.

```bash

$ vi $HBASE_HOME/conf/hbase-env.sh

export HBASE_HEAPSIZE=16384 # 16GB

```

Step 2. Enable Garbage Collection Logging: Enable verbose GC logging to analyze garbage collection behavior.

```bash

$ vi $HBASE_HOME/conf/hbase-env.sh

export HBASE_OPTS="$HBASE_OPTS -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -Xloggc:/usr/local/hbase/logs/gc-hbase.log"

```

Step 3. Configure CMS Initiating Occupancy Fraction: Start the Concurrent Mark Sweep (CMS) garbage collector earlier by setting the occupancy fraction.

```bash

$ vi $HBASE_HOME/conf/hbase-env.sh

export HBASE_OPTS="$HBASE_OPTS -XX:CMSInitiatingOccupancyFraction=60"

```

Important: After making these changes, synchronize the configuration across your cluster and restart HBase.

Understanding the Configuration

Step 1 configures the HBase heap size. The default of 1GB is insufficient for most production deployments. A heap size between 8GB and 16GB is generally recommended, depending on your workload and available RAM.

Step 2 enables JVM logging. Analyzing these logs provides insights into JVM memory allocation and garbage collection processes. A solid understanding of JVM memory management is essential for interpreting the logs effectively.

```

+----------------+------+------+----------------------+--------+
|      Eden      |  S0  |  S1  |    Old generation    |  Perm  |
+----------------+------+------+----------------------+--------+
|<------ Young Gen Space ----->|

```

The JVM heap is divided into generations: Permanent (PermGen, replaced by Metaspace in Java 8), Old Generation, and Young Generation. The Young Generation comprises the Eden space and two survivor spaces (S0 and S1).

- Objects are initially allocated in the Eden space of the Young Generation.

- If allocation fails due to a full Eden space, a minor GC (Young Generation GC) is triggered, pausing all Java threads.

- Surviving objects from the Young Generation (Eden and S0) are copied to S1.

- If S1 is full, objects are promoted to the Old Generation.

- A major/full GC collects the Old Generation when promotion fails.

- The Permanent Generation and Old Generation are typically collected together.

- The Permanent Generation stores class and method definitions.

Analyzing GC Logs

Here’s an example of a healthy GC log output:

This log indicates a well-performing configuration with minimal garbage collection issues. Now, let’s examine a log with potential problems:

Before diagnosing the issue, let’s define the log elements:

<timestamp>: [GC [<collector>: <starting occupancy1> -> <ending occupancy1>, <pause time1> secs]

<starting occupancy3> -> <ending occupancy3>, <pause time3> secs]

[Times: <user time> <system time>, <real time>]

- <timestamp>: Time elapsed since application start.

- <collector>: Internal collector name (e.g., “ParNew” for the parallel new generation collector).

- <starting occupancy1>: Young generation occupancy before GC.

- <ending occupancy1>: Young generation occupancy after GC.

- <pause time1>: Pause time for the minor collection.

- <starting occupancy3>: Entire heap occupancy before GC.

- <ending occupancy3>: Entire heap occupancy after GC.

- <pause time3>: Total GC pause time (including major collections).

- [Times:]: CPU time spent in user mode, system mode, and real (wall-clock) time.

Diagnosing the Problem

In the “issue” log, the first line indicates a minor GC, pausing the JVM for 0.076 seconds, reducing the Young Generation from 14.8MB to 1.6MB.
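
To make the log format concrete, here is a minimal sketch that pulls the fields of a minor-GC line apart with `sed`. The function name `parse_gc_line` and the sample line are hypothetical: the sample is constructed to resemble the case just described (a 0.076 second pause, Young Generation shrinking from roughly 14.8MB to 1.6MB) and is not taken from a real log. It assumes the usual `-XX:+PrintGCDetails` layout, in which each occupancy pair is followed by the region capacity in parentheses.

```bash
# Hypothetical helper: extract the fields of a ParNew-style minor GC log line.
parse_gc_line() {
  printf '%s\n' "$1" | sed -n -E \
    's/^([0-9.]+): \[GC.*\[([A-Za-z]+): ([0-9]+)K->([0-9]+)K\([0-9]+K\), ([0-9.]+) secs\] ([0-9]+)K->([0-9]+)K\([0-9]+K\), ([0-9.]+) secs\].*$/collector=\2 young_kb=\3->\4 minor_pause_s=\5 heap_kb=\6->\7 total_pause_s=\8/p'
}

# Constructed sample line (illustrative values only, not real output).
sample='1441.100: [GC 1441.100: [ParNew: 14784K->1600K(14784K), 0.0760000 secs] 153600K->140416K(1040000K), 0.0761000 secs] [Times: user=0.10 sys=0.00, real=0.08 secs]'

parse_gc_line "$sample"
```

Lines that do not match the pattern (for example CMS phase lines) produce no output, so the function can be run over a whole log to list only the minor collections.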

Subsequent lines show CMS GC logs, as HBase defaults to CMS for the Old Generation. CMS performs these steps:

- Initial mark (pauses application threads briefly)

- Concurrent marking (runs concurrently with the application)

- Remark (pauses application threads briefly)

- Concurrent sweeping (runs concurrently with the application)

The second line reveals that the CMS initial mark took 0.01 seconds, and concurrent marking took 6.496 seconds (no pause).

The line starting with 1441.435: [GC[YG occupancy:…] shows a pause of 0.041 seconds for remarking the heap. CMS sweeping then takes 3.446 seconds, but the heap size remains around 150MB.

The goal is to minimize these pauses. Adjust the Young Generation size using -XX:NewSize and -XX:MaxNewSize, potentially setting them to smaller values (e.g., several hundred MB). If the server has sufficient CPU power, use the Parallel New Collector (-XX:+UseParNewGC) and tune the number of GC threads for the Young Generation with -XX:ParallelGCThreads.

Placement of JVM Options: Add the above settings to the HBASE_REGIONSERVER_OPTS variable in hbase-env.sh, rather than HBASE_OPTS. This ensures the options only affect region server processes (which handle heavy data processing), leaving the HBase master unaffected.
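
As a sketch of that placement, the Young Generation options can be appended to HBASE_REGIONSERVER_OPTS as shown here. The 512m size is an assumption chosen only to illustrate "several hundred MB"; 28 GC threads is the value computed earlier for the 40-core server. These flags apply to the Java 8-era JVMs this post targets; later JDK releases dropped ParNew and CMS.

```bash
# Illustrative values only: tune against your own GC logs.
YOUNG_GEN_SIZE=512m   # assumption, "several hundred MB"
GC_THREADS=28         # from the ParallelGCThreads formula for 40 cores

# Append to any existing region server options instead of overwriting them.
HBASE_REGIONSERVER_OPTS="${HBASE_REGIONSERVER_OPTS:-} -XX:+UseParNewGC \
-XX:NewSize=$YOUNG_GEN_SIZE -XX:MaxNewSize=$YOUNG_GEN_SIZE \
-XX:ParallelGCThreads=$GC_THREADS"
export HBASE_REGIONSERVER_OPTS

echo "$HBASE_REGIONSERVER_OPTS"
```

Setting NewSize and MaxNewSize to the same value pins the Young Generation size, which makes pause times easier to compare between runs.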

Regarding the Old Generation and CMS, concurrent collection speed cannot typically be improved. However, start CMS earlier by setting the -XX:CMSInitiatingOccupancyFraction JVM flag to a value like 60 or 70 percent, as demonstrated in Step 3. Using CMS for the Old Generation automatically selects the Parallel New Collector for the Young Generation.

Complete Configuration Example (hbase-env.sh)

```bash

# The maximum amount of heap to use, in MB. Default is 1000.

export HBASE_HEAPSIZE=16384

# Extra Java runtime options.

# Below are what we set by default. May only work with SUN JVM.

# For more on why as well as other possible settings,

# see http://wiki.apache.org/hadoop/PerformanceTuning

export HBASE_OPTS="-XX:+UseConcMarkSweepGC"

export HBASE_OPTS="$HBASE_OPTS -verbose:gc -XX:+PrintGCDetails \
  -XX:+PrintGCTimeStamps -Xloggc:/opt/hbase/logs/gc-hbase.log"

export HBASE_OPTS="$HBASE_OPTS -XX:CMSInitiatingOccupancyFraction=60"

# Uncomment and adjust to enable JMX exporting

# See jmxremote.password and jmxremote.access in $JRE_HOME/lib/management to configure remote password access.

# More details at: http://java.sun.com/javase/6/docs/technotes/guides/management/agent.html

#

export HBASE_JMX_BASE="-Dcom.sun.management.jmxremote.ssl=false \
  -Dcom.sun.management.jmxremote.authenticate=false"

export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS $HBASE_JMX_BASE \
  -Dcom.sun.management.jmxremote.port=10101"

export HBASE_REGIONSERVER_OPTS="-Xms16G -Xmx16g -XX:+ParallelRefProcEnabled \
  -XX:-ResizePLAB -XX:ParallelGCThreads=28 \
  $HBASE_REGIONSERVER_OPTS $HBASE_JMX_BASE \
  -Dcom.sun.management.jmxremote.port=10102"

export HBASE_THRIFT_OPTS="$HBASE_THRIFT_OPTS $HBASE_JMX_BASE \
  -Dcom.sun.management.jmxremote.port=10103"

export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS $HBASE_JMX_BASE \
  -Dcom.sun.management.jmxremote.port=10104"

export HBASE_REST_OPTS="$HBASE_REST_OPTS $HBASE_JMX_BASE \
  -Dcom.sun.management.jmxremote.port=10105"

```

Configuring yarn-site.xml: Resource Management

Properly configuring yarn-site.xml is essential for managing cluster resources effectively.

In our example, each machine has 48GB of RAM. We’ll reserve 40GB for YARN, leaving the rest for the OS and other services.

```xml

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>40960</value> <!-- 40GB in MB -->

</property>

```

This setting defines the maximum memory YARN can utilize on a node.

Next, specify the minimum RAM allocation for a Container. To allow a maximum of 20 Containers, we calculate: (40 GB total RAM) / (20 Containers) = 2 GB minimum per container.

```xml

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value> <!-- 2GB in MB -->

</property>

```

YARN will allocate Containers with RAM amounts greater than or equal to yarn.scheduler.minimum-allocation-mb. You can also configure yarn.scheduler.maximum-allocation-mb to define the maximum container size. Experiment with these values to find the optimal balance for your workload.
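
The container arithmetic is easy to script when experimenting with different values. This is a minimal sketch; the function names `min_allocation_mb` and `containers_for` are hypothetical helpers, not part of YARN.

```bash
# Hypothetical helper: minimum container size (MB) for a target container count.
min_allocation_mb() {
  yarn_mb=$1      # value of yarn.nodemanager.resource.memory-mb
  containers=$2   # desired maximum number of containers per node
  echo $(( yarn_mb / containers ))
}

# Hypothetical helper: how many containers of a given size fit on a node.
containers_for() {
  yarn_mb=$1
  container_mb=$2
  echo $(( yarn_mb / container_mb ))
}

min_allocation_mb 40960 20   # prints 2048
containers_for 40960 4096    # prints 10
```

The second call shows the trade-off: doubling the container size to 4GB halves the number of containers a node can run at once.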

Hadoop JAVA_OPTS Configuration

Tuning Hadoop’s Java options is as crucial as tuning HBase’s.

Set the namenode heap size to 16GB. Don’t starve the namenode of RAM, similar to HBase.

```bash

# Command specific options appended to HADOOP_OPTS when specified

export HADOOP_NAMENODE_OPTS="-Xms16G -Xmx16g \
  -Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} \
  -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} \
  $HADOOP_NAMENODE_OPTS"

```

Configure the datanode heap size. The optimal value depends on the server configuration. A heap size of 4-8GB should suffice for most datanode deployments, but for our hardware, we’ll allocate more:

```bash

export HADOOP_DATANODE_OPTS="-Xms16G -Xmx16g -Dhadoop.security.logger=ERROR,RFAS \
  $HADOOP_DATANODE_OPTS"

```

Set the secondary-namenode heap size similar to the namenode:

```bash

export HADOOP_SECONDARYNAMENODE_OPTS="-Xms16G -Xmx16g \
  -Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} \
  -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} \
  $HADOOP_SECONDARYNAMENODE_OPTS"

```

More information on configuring these parameters can be found in this Hortonworks blog post: How to Plan and Configure YARN

Hadoop/HBase sysctl.conf Tweaks: System-Level Optimization

Modifying system parameters via sysctl.conf and limits.conf can significantly impact Hadoop and HBase performance. These settings require root privileges.

File System

fs.file-max: Raise the system-wide limit on open file handles. This is critical for handling the large number of files Hadoop and HBase manage.

```bash

[ahmed@server ~]# echo 'fs.file-max = 943718' >> /etc/sysctl.conf

```

Memory Management (Swappiness): Minimize swapping to disk, which severely degrades performance.

- vm.dirty_ratio: Set the percentage of system memory that can be filled with “dirty” pages (pages that still need to be written to disk).

- vm.swappiness: Control how aggressively the kernel uses swap space. Setting this to 0 minimizes swapping.

```bash

[ahmed@server ~]# echo 'vm.dirty_ratio=10' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'vm.swappiness=0' >> /etc/sysctl.conf

```

Connection Settings

- net.core.netdev_max_backlog: Increase the queue length for incoming packets.

- net.core.somaxconn: Increase the maximum number of queued connections for listening sockets.

```bash

[ahmed@server ~]# echo 'net.core.netdev_max_backlog = 4000' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.core.somaxconn = 4000' >> /etc/sysctl.conf

```

TCP Settings

- net.ipv4.tcp_sack: Disable Selective Acknowledgments (SACKs). While SACKs can improve performance in some networks, they can sometimes introduce issues in data center environments.

- net.ipv4.tcp_dsack: Disable Duplicate SACKs. Similar to disabling SACKs, this can improve stability.

- net.ipv4.tcp_keepalive_time: Idle time before the first keep-alive probe is sent. Default: 2 hours.

- net.ipv4.tcp_keepalive_probes: Number of unanswered keep-alive probes to send before considering the connection broken. Default: 9.

- net.ipv4.tcp_keepalive_intvl: Interval between keep-alive probes. Default: 75 seconds.

- net.ipv4.tcp_fin_timeout: Time to keep a socket in the FIN-WAIT-2 state. Default: 60 seconds.

- net.ipv4.tcp_rmem: Minimum, initial, and maximum receive buffer sizes per connection, in bytes.

- net.ipv4.tcp_wmem: Minimum, initial, and maximum send buffer sizes per connection, in bytes.

- net.ipv4.tcp_retries2: Number of retransmissions on an established connection before the kernel gives up and closes it.

- net.ipv4.tcp_synack_retries: Number of SYN-ACK retransmissions for passive connection attempts.

```bash

[ahmed@server ~]# echo 'net.ipv4.tcp_sack = 0' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv4.tcp_dsack = 0' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv4.tcp_keepalive_time = 600' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv4.tcp_keepalive_probes = 5' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv4.tcp_keepalive_intvl = 15' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv4.tcp_fin_timeout = 30' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv4.tcp_rmem = 32768 436600 4194304' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv4.tcp_wmem = 32768 436600 4194304' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv4.tcp_retries2 = 10' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv4.tcp_synack_retries = 3' >> /etc/sysctl.conf

```
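
To see what the keep-alive settings mean in practice, the time to detect a dead idle peer is roughly the idle time plus one probe interval per unanswered probe. The sketch below works this out; `keepalive_detect_secs` is a hypothetical helper name.

```bash
# Hypothetical helper: seconds until an idle, dead peer is declared broken.
# Arguments: tcp_keepalive_time, tcp_keepalive_probes, tcp_keepalive_intvl
keepalive_detect_secs() {
  echo $(( $1 + $2 * $3 ))
}

keepalive_detect_secs 600 5 15    # tuned values above: prints 675
keepalive_detect_secs 7200 9 75   # kernel defaults:    prints 7875
```

With the tuned values a dead connection is noticed in a little over 11 minutes instead of more than two hours.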

Disable IPv6 (If Not Used)

```bash

[ahmed@server ~]# echo 'net.ipv6.conf.all.disable_ipv6 = 1' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv6.conf.default.disable_ipv6 = 1' >> /etc/sysctl.conf

[ahmed@server ~]# echo 'net.ipv6.conf.lo.disable_ipv6 = 1' >> /etc/sysctl.conf

```

Apply Changes:

```bash

[ahmed@server ~]# sysctl -p

```

Update Limits (limits.conf): Increase the number of open files and processes allowed per user.

```bash

[ahmed@server ~]# echo '* - nofile 100000' >> /etc/security/limits.conf

[ahmed@server ~]# echo '* - nproc 100000' >> /etc/security/limits.conf

```
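
Limits set in limits.conf apply to new login sessions, so it is worth confirming that they took effect. A minimal sketch, assuming the check is run as the user that starts Hadoop and HBase; `check_nofile` is a hypothetical helper name.

```bash
# Hypothetical helper: compare the current shell's open-file limit with a target.
check_nofile() {
  want=$1
  have=$(ulimit -n)
  if [ "$have" = "unlimited" ] || [ "$have" -ge "$want" ]; then
    echo "ok: nofile=$have (target $want)"
  else
    echo "too low: nofile=$have (target $want)"
    return 1
  fi
}

check_nofile 100000 || echo "log out and back in after editing limits.conf, then re-check"
```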

Complete Script (Run as root)

```bash

#!/bin/bash

echo "Taking sysctl.conf backup..."

cp /etc/sysctl.conf /etc/sysctl.conf.bkpz

echo "###########################################" >> /etc/sysctl.conf

echo "#########---------------------------#######" >> /etc/sysctl.conf

echo "#        CUSTOM SYSCTL.CONF PARAMS        #" >> /etc/sysctl.conf

echo "#########---------------------------#######" >> /etc/sysctl.conf

echo "###########################################" >> /etc/sysctl.conf

echo 'net.ipv4.tcp_sack = 0' >> /etc/sysctl.conf

echo 'net.ipv4.tcp_dsack = 0' >> /etc/sysctl.conf

echo 'net.ipv4.tcp_keepalive_time = 600' >> /etc/sysctl.conf

echo 'net.ipv4.tcp_keepalive_probes = 5' >> /etc/sysctl.conf

echo 'net.ipv4.tcp_keepalive_intvl = 15' >> /etc/sysctl.conf

echo 'net.ipv4.tcp_fin_timeout = 30' >> /etc/sysctl.conf

echo 'net.ipv4.tcp_rmem = 32768 436600 4194304' >> /etc/sysctl.conf

echo 'net.ipv4.tcp_wmem = 32768 436600 4194304' >> /etc/sysctl.conf

echo 'net.ipv4.tcp_retries2 = 10' >> /etc/sysctl.conf

echo 'net.ipv4.tcp_synack_retries = 3' >> /etc/sysctl.conf

echo 'net.ipv6.conf.all.disable_ipv6 = 1' >> /etc/sysctl.conf

echo 'net.ipv6.conf.default.disable_ipv6 = 1' >> /etc/sysctl.conf

echo 'net.ipv6.conf.lo.disable_ipv6 = 1' >> /etc/sysctl.conf

echo 'vm.dirty_ratio = 10' >> /etc/sysctl.conf

echo 'vm.swappiness = 0' >> /etc/sysctl.conf

echo 'fs.file-max = 943718' >> /etc/sysctl.conf

echo "Applying changes - executing 'sysctl -p' command..."

sysctl -p

echo "############################################################"

echo "UPDATE COMPLETE"

echo "############################################################"

```
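
One caveat with appending via `echo >>` is that running the script twice leaves duplicate entries in the file. A possible alternative is a helper that replaces an existing key before appending. This is a sketch under stated assumptions: `set_sysctl` is a hypothetical name, and the demo below works on a scratch file rather than /etc/sysctl.conf.

```bash
# Hypothetical helper: set "key = value" in a sysctl-style file exactly once.
# Usage: set_sysctl KEY VALUE FILE
set_sysctl() {
  key=$1
  value=$2
  file=$3
  tmp=$(mktemp) || return 1
  # Keep every line except an existing assignment of this exact key.
  awk -F= -v k="$key" '{ name = $1; gsub(/[ \t]/, "", name); if (name != k) print }' "$file" > "$tmp"
  printf '%s = %s\n' "$key" "$value" >> "$tmp"
  # Write back through the original file so its ownership and mode are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Demo on a scratch file; pass /etc/sysctl.conf (as root) for real use.
demo_conf=$(mktemp)
printf 'vm.swappiness = 60\n# existing comment\n' > "$demo_conf"
set_sysctl vm.swappiness 0 "$demo_conf"
set_sysctl vm.swappiness 0 "$demo_conf"   # second run does not duplicate the key
set_sysctl net.ipv4.tcp_rmem '32768 436600 4194304' "$demo_conf"
cat "$demo_conf"
```

Comments and unrelated keys are left untouched, so the helper can be rerun safely after changing a value.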

Extras: Monitoring JVM with JMX

Monitoring the JVM is crucial for proactive performance management. This section briefly covers enabling JVM monitoring via JMX.

For detailed instructions on setting up JVM monitoring with Zabbix, refer to this guide: zabbix_java_gateway

These options turn off SSL and authentication for the JMX agent, which is convenient for testing:

```

-Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false

```

This option sets the JMX port for remote monitoring:

```

-Dcom.sun.management.jmxremote.port=10101

```

Important Considerations for Production Environments:

- Security: The -Dcom.sun.management.jmxremote.authenticate=false option disables authentication, making your JMX endpoint vulnerable. Never use this in a production environment. Configure proper authentication and SSL encryption for JMX.

- Firewall: Ensure your firewall allows access to the JMX port only from authorized monitoring servers.
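
A hardened variant of HBASE_JMX_BASE might look like the sketch below. The two file paths are hypothetical placeholders; the password and access files follow the jmxremote.password and jmxremote.access formats referenced in hbase-env.sh. Enabling SSL also requires a keystore configured through the standard javax.net.ssl.keyStore properties, which is not shown here.

```bash
# Hypothetical paths: point these at your own credential files.
JMX_PASSWORD_FILE=/etc/hbase/conf/jmxremote.password
JMX_ACCESS_FILE=/etc/hbase/conf/jmxremote.access

# Require authentication and SSL instead of disabling them.
HBASE_JMX_BASE="-Dcom.sun.management.jmxremote.ssl=true \
-Dcom.sun.management.jmxremote.authenticate=true \
-Dcom.sun.management.jmxremote.password.file=$JMX_PASSWORD_FILE \
-Dcom.sun.management.jmxremote.access.file=$JMX_ACCESS_FILE"
export HBASE_JMX_BASE

echo "$HBASE_JMX_BASE"
```

The JVM refuses to start the JMX agent if the password file is readable by other users, so restrict it to the account that runs HBase.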

Useful Links

- Linux Kernel Networking Documentation

- Linux Kernel Sysctl Documentation

- HBase Administration and Performance Tuning (Packt)

- Tuning Java Garbage Collection for HBase (Intel)

- JVM Parameter MaxNewSize (Stack Overflow)

- Configuring the Default JVM and Java Arguments (Oracle)

- HBase Performance Tuning (Cloudera Archive)

- Big SQL InfoSphere Tuning (IBM)

- Hadoop Performance Tuning (Apache Wiki)

- Apache HBase Book

This post provides a starting point for optimizing HBase and Hadoop performance. Remember that the optimal configuration will vary depending on your specific hardware, workload, and data characteristics. Continuous monitoring and experimentation are key to achieving peak performance in your big data environment.